Research

Problem

Designing a front-end for our web-app that is intuitive, easy to learn and easy to remember was a challenge, but one we resolved readily through research into common interaction practices in interface design, combined with a drop of creativity. The most important, and therefore most time-consuming, task was finding the way to perform the search that best balances speed and quality.

Initial Research

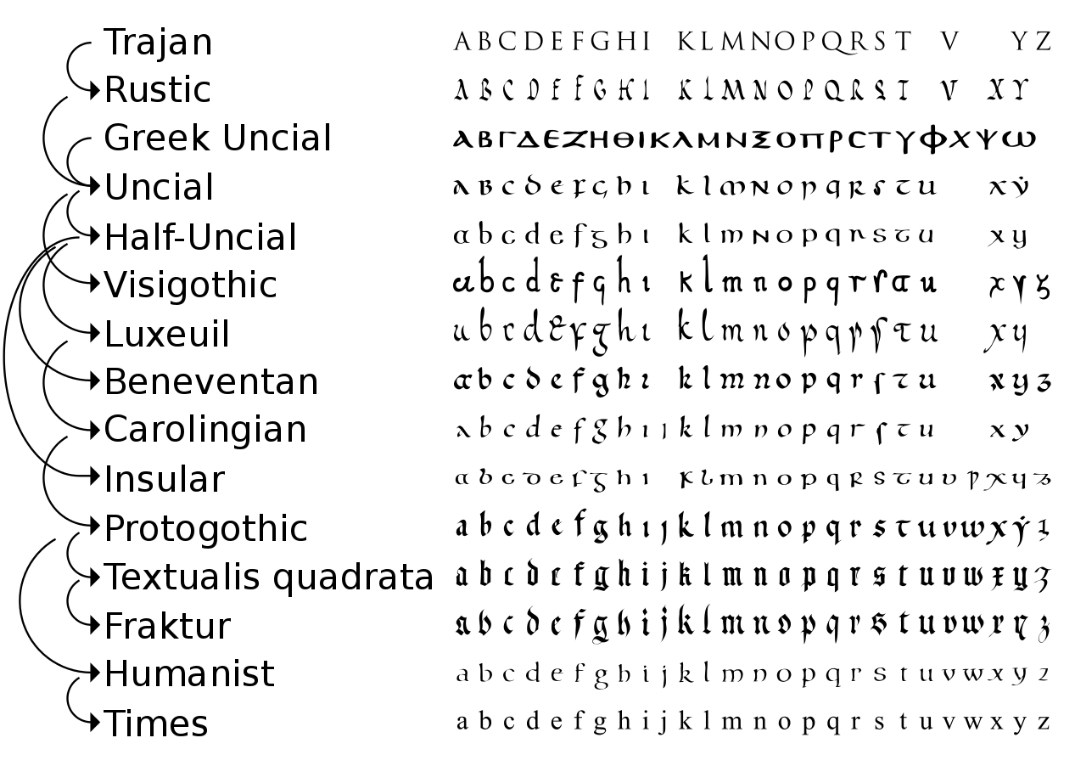

Our initial research consisted of analyzing similar projects on scribal handwriting recognition and their relation to paleography, to better understand the field in which the application would be used. By researching paleographers' needs, we found that the majority of them require a tool to find the occurrences of a specific character across multiple documents.

By analyzing and studying similar projects, we refined our design idea and set goals to improve on specific areas, such as recognition of irregularly organized text and performance optimization to reduce computation time.

Technological Research

The Hancock JavaScript Library

This library works by analyzing the pixel data in each of the vertical lines that make up an image and comparing the color progression of the pixels across all of them. It did not serve our purpose well and was very error-prone, but it was a useful starting point: it led us to decide against using JavaScript for the back-end.

TensorFlow

TensorFlow is a Google product with good support for other web tools and for the Google Cloud Platform services, which would make deploying the machine learning model easy. Because it is so popular, it is also very well documented, the community support is vast and there are many useful tutorials. These facts moved it to the top of our list of options, so we started working with Python.

Scientific Python with NumPy

We discovered the Normalized Cross-Correlation function in the SciPy package, a function that treats two NumPy arrays as signals and compares them to measure how similar they are.
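To make the idea of normalized cross-correlation concrete, here is a minimal sketch written directly in NumPy. It is not the SciPy call the project used (the report does not name it); the function name `normalized_cross_correlation` and the tiny test glyph are illustrative assumptions. It computes the zero-mean, normalized correlation of two equally sized arrays, which ranges from -1 (inverted) to 1 (identical up to brightness and contrast).

```python
import numpy as np


def normalized_cross_correlation(a, b):
    """Zero-mean normalized cross-correlation of two equal-shaped arrays.

    Hypothetical helper for illustration; returns a value in [-1, 1].
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("arrays must have the same shape")
    # Remove the mean so that overall brightness does not affect the score.
    a = a - a.mean()
    b = b - b.mean()
    # Normalize by the energy of both signals so contrast does not matter.
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    if denom == 0:
        return 0.0  # a flat image carries no pattern to compare
    return float((a * b).sum() / denom)


# A made-up 3x3 "glyph" standing in for a character image.
glyph = np.array([[0, 1, 0],
                  [1, 1, 1],
                  [0, 1, 0]], dtype=float)

same = normalized_cross_correlation(glyph, glyph)              # close to 1
brighter = normalized_cross_correlation(glyph, 2 * glyph + 5)  # close to 1
inverted = normalized_cross_correlation(glyph, 1 - glyph)      # close to -1
print(same, brighter, inverted)
```

Because the score ignores uniform changes in brightness and contrast, it is a reasonable fit for scanned pages whose exposure varies.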

Deployment Platform

At the very start we were undecided between a desktop app and a web-app. After discussing the decision with our client, and having gathered a list of requirements that gave us a preliminary insight into how the app is intended to be used, we concluded that a web-based application would be the best match for our client's needs. A web-app offers great flexibility in distributing pieces of the software across the network, stores records and progress independently of any local or offline storage, and makes resources easy to access and share. Most important of all, it is platform independent. It does not rely on the processing power of the computer you are using, so with a reliable internet connection the software would perform well on one of the old desktop machines we have seen at the British Library's offices, and would run just as smoothly on a personal tablet.

In addition, securing a web platform is much more straightforward than handling installation and possible compatibility issues on many different machines. All things considered, the development process was probably harder to start, until we had set up all the components. Once we got it running, however, the process was very efficient and bug-free, letting us concentrate on implementing features rather than solving irrelevant, machine-related bugs and issues.

Programming Language

As machine learning and scientific numerical analysis are mainly done in the Python programming language, which is also very straightforward, all our alternatives revolved around it. Naturally, we chose it as the main programming language for image processing.

Machine Learning

This part of the project has taken us on a long trip, with not too many trophies at the end.

Below is a summary of what happened.

Preliminary Activity

To introduce us to the project's topic, we first had a meeting with Dr. Gabriel Brostow, where we discussed the potential problems and solutions. We agreed to research TensorFlow and the possibilities of applying Machine Learning to our project.

Looking back from our current perspective, the main idea at that meeting was to build an artificial neural network model that could be trained to find characters of similar appearance (without accounting for their meaning). Ideally, with more searches and more user profiles, each user could define their own set of aspects that make two characters resemble each other, by using the software and selecting the relevant matches themselves (some historians might look for characters that differ only in font, while other archivists might want to find characters that are similar in form but not in meaning). Either way, in our opinion this was presented as an unsupervised, clustering machine learning process rather than a supervised, classifying one, mostly because the machine would not have to understand the meaning of the characters it compares. The highlighted problem with this approach was the omission of so-called “false negatives”: relevant selections that the machine does not show to the user, which therefore never get confirmed as valid training data, so content might be lost.

New Possible Features

One of the meetings with our client raised a prospect that made us really excited: it was suggested to us, as a potential requirement, that the software could eventually learn the difference in style between a Greek ‘a’ and a Latin ‘a’ and maybe, judging from their distribution within the text, even predict the language a paragraph is written in. Had that succeeded, it would have hugely extended what one could do with our software. It could even point out links between documents that professionals did not know existed, mainly because nobody had the time or motivation to search through as many documents as our software could.

However, this meant that the machine could no longer ignore the meaning of the characters, and would have to learn what the distinguishing aspects are from the sample data it is trained with.

Studying Basic Functions with scikit-learn

We successfully implemented digit recognition by fitting a k-nearest-neighbors classifier with the MNIST dataset as training data. The MNIST dataset contains 60,000 training images of handwritten digits from 0 to 9, written by approx. 600 different people. With this huge amount of data for just 10 digits, we managed to train a model that recognized any newly drawn digit we showed it (one it had never seen before).
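The experiment described above can be approximated with the short sketch below. It is not the project's original script: to stay self-contained it swaps MNIST for the much smaller 8x8 digits dataset bundled with scikit-learn, and the choice of `n_neighbors=3` and the 75/25 split are assumptions made for illustration.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Small bundled dataset of 8x8 handwritten digits (a stand-in for MNIST).
digits = load_digits()

# Hold back a quarter of the images so the model is scored on digits
# it has never seen during fitting.
X_train, X_test, y_train, y_test = train_test_split(
    digits.data,
    digits.target,
    test_size=0.25,
    random_state=0,
    stratify=digits.target,
)

# k-NN stores the labeled training images and classifies a new image
# by majority vote among its k closest training images.
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(X_train, y_train)

accuracy = knn.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.3f}")
print("predicted:", knn.predict(X_test[:5]), "actual:", y_test[:5])
```

Note that k-NN needs every class to be well represented among the labeled examples, which is easy for ten digits but far harder for the open-ended set of characters found in manuscripts.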

Confident after that experiment, we believed that a supervised, labeling ML process could be a more feasible approach than the unsupervised, clustering procedure suggested initially, and that the same implementation as in the MNIST digit recognition example could be applied to our case. It was also a great match for the new requirement presented above.

The compromise solution would have been to run NCC until the user has labeled enough letters for the app to have enough training data to switch to ML recognition.

We first considered that the machine shouldn’t take into account the meaning of the characters it analyzes, so that each user could train the system with what they consider a relevant similarity.

However, we found out the hard way that the amount of time needed would make this approach unfeasible.

Starting with the Artificial Neural Networks

Aron, our TA, persuaded us to approach this using a DCNN (deep convolutional neural network), as many problems such as rotation and size variance are already solved and documented. Specifically, he advised us to take a pre-trained VGG-16 model and tweak it for our purpose. We successfully set up the 16-layer VGG model in TensorFlow and it did a great job spotting cats and balloons, but more work had to be done for letters.

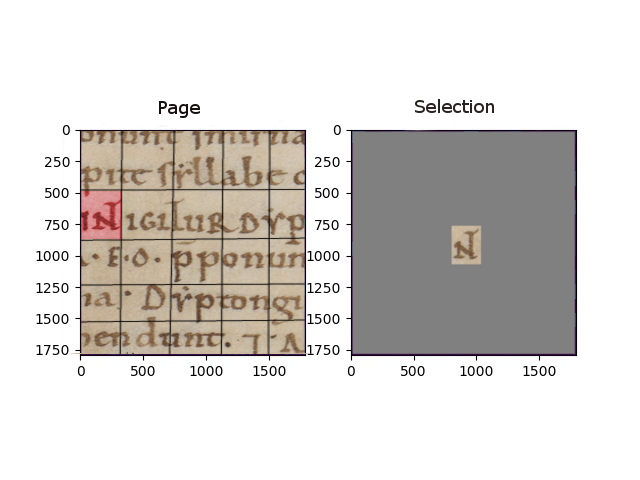

Following the principle that passing an image through the neural network filters its content for the most relevant information, Aron’s idea was to perform the following experiment. We put two images through the network, one of a full page and one of a single character, and take the intermediate output of one of the non-terminal layers of the convolutional network (layer 5) for both images. We now have two processed arrays representing the initial inputs. We divide the first one into several character-sized patches and cross-correlate every patch with the template (the image with only one character). We take the coordinates of the patch that scored highest and overlay a heat-map on the original image. The result should be a prediction of where we are most likely to find our template. The picture in this section shows the output of the experiment described here.
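The patch-scoring step of this experiment can be sketched as follows. To keep the example runnable without TensorFlow, random arrays stand in for the layer-5 feature maps, and the template features are planted into the page features at a known position. The names `ncc` and `feature_heatmap`, the array shapes, and the choice of sliding the window one cell at a time are all assumptions for illustration, not the code used in the project.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-shaped arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def feature_heatmap(page_features, template_features):
    """Score every template-sized patch of a (height, width, channels) map."""
    page_h, page_w, page_c = page_features.shape
    tmpl_h, tmpl_w, tmpl_c = template_features.shape
    if page_c != tmpl_c:
        raise ValueError("feature maps must have the same number of channels")
    heat = np.zeros((page_h - tmpl_h + 1, page_w - tmpl_w + 1))
    for y in range(heat.shape[0]):
        for x in range(heat.shape[1]):
            patch = page_features[y:y + tmpl_h, x:x + tmpl_w, :]
            heat[y, x] = ncc(patch, template_features)
    return heat


# Stand-ins for the intermediate network outputs (assumed shapes).
rng = np.random.default_rng(0)
page_features = rng.random((20, 30, 8))
# Plant the "character" at row 6, column 12 of the page feature map.
template_features = page_features[6:10, 12:15, :].copy()

heat = feature_heatmap(page_features, template_features)
best_y, best_x = np.unravel_index(np.argmax(heat), heat.shape)
print("best match at feature-map cell:", best_y, best_x)
```

The `heat` array is what would be upscaled and overlaid on the original page; mapping feature-map cells back to pixel coordinates depends on the strides of the network layers and is left out here.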

Outcome

Although this proved to be an interesting solution that could open many doors, we had neither the time nor enough evidence in its favor to justify the struggle of deploying it in our final software, as setting up this method would require a whole different range of hosting technologies, at a higher price. It would most probably have been worth it had we been able to implement other features that work with Machine Learning as well, but at the point we had reached when the software was due for delivery, it was not appealing.

Solution

Essentially, we had to design a script that splits a page into several patches, which are subsequently compared to the template that has been input for the search. For this image processing task, we eventually chose the Normalized Cross-Correlation function within the SciPy Python package to convert the images into signals and perform a technique known as cross-correlation, which outputs a degree of resemblance between the images compared. We wrote our script so that it outputs the results from the most relevant downwards. This technology has proven reliable; although it may not be the most time-efficient or the most accurate, it was certainly a great match for our prototype-based project.
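A minimal sketch of such a search script is shown below, assuming grayscale images held as 2-D NumPy arrays. It is written in plain NumPy rather than with the SciPy function the project used, and the names `search_page`, `stride` and `top_k`, as well as the synthetic page, are hypothetical choices made for this example.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-shaped arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def search_page(page, template, stride=1, top_k=5):
    """Return the top_k (score, row, col) matches, most relevant first."""
    page = np.asarray(page, dtype=float)
    template = np.asarray(template, dtype=float)
    tmpl_h, tmpl_w = template.shape
    results = []
    # Split the page into template-sized patches and score each one.
    for y in range(0, page.shape[0] - tmpl_h + 1, stride):
        for x in range(0, page.shape[1] - tmpl_w + 1, stride):
            patch = page[y:y + tmpl_h, x:x + tmpl_w]
            results.append((ncc(patch, template), y, x))
    # Output the results from the most relevant downwards.
    results.sort(key=lambda r: r[0], reverse=True)
    return results[:top_k]


# Synthetic page with the template planted twice (the second copy fainter).
rng = np.random.default_rng(1)
page = rng.random((40, 60))
template = rng.random((8, 6))
page[5:13, 10:16] = template
page[25:33, 40:46] = 0.5 * template + 0.2

matches = search_page(page, template, top_k=3)
for score, y, x in matches:
    print(f"score={score:.3f} at row={y}, col={x}")
```

Scoring every patch costs time proportional to the page area times the template area, which is consistent with the observation that this approach is reliable but not the most time-efficient. A larger `stride` trades accuracy for speed.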