Testing

We carried out several kinds of tests throughout development and used them to evaluate the state of the project at the point each test was performed.

Unit Testing

To ensure that the individual front-end components worked reliably as designed, we followed a test-driven development approach. In particular, unit tests were written to check that the behaviour of each component was consistent with the design specification. To run these tests we used the Jest framework. Jest considerably sped up development thanks to its ability to capture snapshots of the rendered React trees, which let us track how a component's output changed as its internal state changed over time. For example, on the Character Search Page this was used to test that the sliders, toggle buttons and cropping tools correctly updated the state before a request was sent to the back-end.

Furthermore, we used the Enzyme library to test component boundaries, mainly through shallow rendering and by checking how values propagate between components. Each test constructed an element together with its immediate children, simulated a user interaction on the parent, and checked that the resulting changes propagated correctly down to the children [1]. Specifically, this was used on the Manuscript Collection Page to test that the data of each manuscript was correctly passed to the child component (the modal) that displays its pages.

Functional Testing

Test One: Take a sample from a page and search for it in the page it was taken from. The selected template is expected to be found with a very high degree of accuracy, provided an actual character was selected.

Unit tests were written for the Python script that performs the normalised cross-correlation. Each test followed a series of simple steps. First, a page from a manuscript was selected, and a random area of that page was cropped to mimic a user selecting a character. The application was then run with the cropped area as the search input and the same page as the search field. Finally, the coordinates of the returned match were compared against the randomly generated coordinates used to create the sample, and the test was considered successful only if the two were equal. Re-running this test after each stage of changes allowed us to confirm that the algorithm still worked: as long as it did, the tests consistently passed.

It is worth noting that the success rate of this test falls off dramatically once the input sample is smaller than approximately 10x10 px. At this resolution, a character selected from a handwritten script is unlikely to be distinguishable from random noise in the page. In addition, a randomly placed sample of this size is unlikely to contain an entire character, if it contains any part of one at all.
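The self-search test above can be sketched in Python as follows. This is a minimal, pure-Python illustration assuming a zero-mean normalised cross-correlation computed by exhaustive search; it is not the project's script, and the helper names `ncc_at`, `best_match` and `crop_and_find`, the list-of-rows image format and the synthetic noise page in the usage example are all hypothetical.

```python
import math
import random
from typing import List, Tuple

# Hypothetical image format: a grayscale image as a list of rows of intensities.
Image = List[List[float]]


def ncc_at(page: Image, tmpl: Image, top: int, left: int) -> float:
    """Zero-mean normalised cross-correlation of tmpl with the page window at (top, left)."""
    h, w = len(tmpl), len(tmpl[0])
    window = [row[left:left + w] for row in page[top:top + h]]
    n = h * w
    mean_t = sum(map(sum, tmpl)) / n
    mean_w = sum(map(sum, window)) / n
    num = sum_tt = sum_ww = 0.0
    for t_row, w_row in zip(tmpl, window):
        for t, v in zip(t_row, w_row):
            dt, dv = t - mean_t, v - mean_w
            num += dt * dv
            sum_tt += dt * dt
            sum_ww += dv * dv
    if sum_tt == 0 or sum_ww == 0:
        return 0.0  # flat patch: correlation is undefined, so treat it as no match
    return num / math.sqrt(sum_tt * sum_ww)


def best_match(page: Image, tmpl: Image) -> Tuple[int, int]:
    """Slide tmpl over every valid position and return the (top, left) with the highest score."""
    h, w = len(tmpl), len(tmpl[0])
    positions = ((r, c) for r in range(len(page) - h + 1)
                 for c in range(len(page[0]) - w + 1))
    return max(positions, key=lambda rc: ncc_at(page, tmpl, rc[0], rc[1]))


def crop_and_find(page: Image, size: int, rng: random.Random) -> bool:
    """One trial of the self-search test: crop a random size x size sample,
    search the same page for it, and pass only if the match is at the crop origin."""
    top = rng.randrange(len(page) - size + 1)
    left = rng.randrange(len(page[0]) - size + 1)
    sample = [row[left:left + size] for row in page[top:top + size]]
    return best_match(page, sample) == (top, left)


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-in for a manuscript page: 30 x 40 pixels of random noise.
    page = [[rng.randrange(256) for _ in range(40)] for _ in range(30)]
    print(all(crop_and_find(page, 8, rng) for _ in range(5)))  # True
```

On a page of pure noise even an 8x8 sample is recovered exactly, because every patch of noise is unique; on real manuscript pages, where small patches of ink and background look alike, the 10x10 px limitation described above applies.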

Test Two: Repeat a search for a character on a page that is already in the system and on which the same character search has already been performed.

This test was performed manually. The results of a search for a particular character in a particular document were stored, and later runs of the same search were compared against them to check whether the algorithm produced consistent results. Because the exact same search could be repeated across the different iterations of the application, this allowed us to identify potential consistency issues introduced by each update to the algorithm.
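Test Two was run by hand, but the same store-and-compare check could be automated along the lines below. This is a sketch under stated assumptions: `search` stands for a hypothetical function that runs a character search and returns match coordinates, and storing one JSON file per document/character pair is our own illustrative choice.

```python
import json
from pathlib import Path
from typing import Callable, List, Sequence, Tuple

Match = Tuple[int, int]  # assumed result format: (row, column) of one match
# Hypothetical search entry point: (document_id, character_id) -> matches.
SearchFn = Callable[[str, str], Sequence[Sequence[int]]]


def check_consistency(search: SearchFn, document_id: str, character_id: str,
                      baseline_dir: Path) -> bool:
    """Compare a search with the stored result of the same search.

    The first run stores the result as the baseline and returns True; later runs
    return True only if the algorithm reproduces exactly the same matches.
    """
    baseline = baseline_dir / f"{document_id}__{character_id}.json"
    # Sort so that a change in reporting order alone is not flagged as a regression.
    result: List[Match] = sorted(tuple(m) for m in search(document_id, character_id))
    if not baseline.exists():
        baseline.write_text(json.dumps(result))
        return True
    stored = [tuple(m) for m in json.loads(baseline.read_text())]
    return stored == result
```

Sorting the matches before storing them makes the comparison insensitive to the order in which results are reported, so only a genuine change in the matches causes a failure.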

Performance Testing

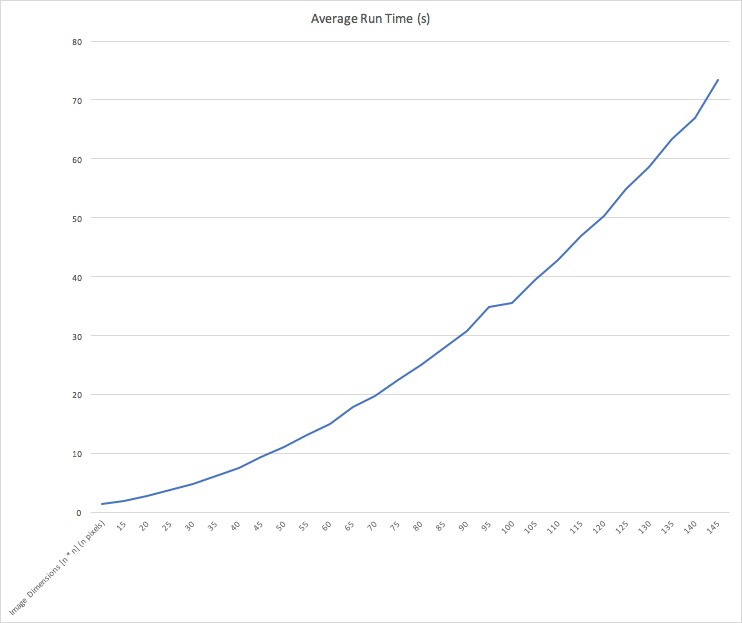

Performance tests were carried out to see how the algorithm performs as the size of the selected input image varies. Since page resolution varies little from one document image to another (typically around 1000x1500 px), only the size of the input sample was varied. By measuring the time the algorithm takes to return results, we can identify how the application's runtime scales with input size.

As shown in the figure above, the processing time follows an exponential trend as the size of the input character increases.
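Timings like those plotted above could be collected with a small harness such as the following. It is a hedged sketch: `search` is a hypothetical stand-in for the matching routine, and taking the median of a few repeats per size is our own choice to reduce timing noise, not necessarily how the figure was produced.

```python
import random
import statistics
import time
from typing import Callable, Dict, List, Sequence

Image = List[List[int]]
SearchFn = Callable[[Image, Image], object]  # hypothetical: (page, sample) -> search result


def time_by_sample_size(search: SearchFn, page: Image, sizes: Sequence[int],
                        repeats: int = 3, seed: int = 0) -> Dict[int, float]:
    """Median wall-clock time of `search` for square samples of each size.

    The page stays fixed and only the sample size varies; each sample is
    cropped from a random position on the page.
    """
    rng = random.Random(seed)
    timings: Dict[int, float] = {}
    for size in sizes:
        runs = []
        for _ in range(repeats):
            top = rng.randrange(len(page) - size + 1)
            left = rng.randrange(len(page[0]) - size + 1)
            sample = [row[left:left + size] for row in page[top:top + size]]
            start = time.perf_counter()
            search(page, sample)  # only the search itself is timed
            runs.append(time.perf_counter() - start)
        timings[size] = statistics.median(runs)
    return timings
```

To check the shape of the trend, one can plot the logarithm of the median time against the sample size: an exponential relationship appears as a roughly straight line on that scale.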

We also identified limitations of our program when handling damaged or heavily rotated characters. Due to the time constraints we faced during development, support for rotated characters was not included in the final version of the project; it could, however, be added as additional functionality in the future.

Our team did manage to create a relatively basic script for removing damage from certain documents. This script runs separately from the hosted application: it lets the user select a file and returns a grayscale version of that image with most of the damage removed. This reduces the damage enough for our algorithm to recognise characters within the previously damaged areas.
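The text does not describe how the damage-removal script works. Purely as an illustration of the kind of processing involved, the sketch below converts an RGB image to grayscale with the ITU-R BT.601 luma weights and then applies a median filter, a common technique for suppressing small specks. This is an assumed stand-in, not the team's script; the function names and the nested-list image format are hypothetical, and in practice an image library such as Pillow provides equivalent operations.

```python
from statistics import median_low
from typing import List, Tuple

RGB = Tuple[int, int, int]


def to_grayscale(rgb: List[List[RGB]]) -> List[List[int]]:
    """Convert RGB pixels to 8-bit luma using the ITU-R BT.601 weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row] for row in rgb]


def median_filter(gray: List[List[int]], radius: int = 1) -> List[List[int]]:
    """Replace each pixel by the median of its (2*radius+1)^2 neighbourhood,
    clipped at the image border; isolated dark or light specks are removed."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbours = [gray[j][i]
                          for j in range(max(0, y - radius), min(h, y + radius + 1))
                          for i in range(max(0, x - radius), min(w, x + radius + 1))]
            row.append(median_low(neighbours))  # median_low keeps an actual pixel value
        out.append(row)
    return out
```

A median filter is only suited to small, scattered damage; larger stains or tears would need other techniques.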

Stress Testing

Our stress testing focused mainly on the back-end server, which, due to limited resources, was an Ubuntu virtual machine running on Azure. The goal of this phase was to find the number of requests the server could handle and how large a manuscript the CPU could process. To simulate multiple POST requests and make assertions on them, we used Postman. This allowed us to script POST requests with specific manuscript sizes and then compare and evaluate the results. Postman also let us write request-specific tests in JavaScript to make assertions on each response.

The request limit we found was in line with our expectations. With a single-core processor, a low clock speed and limited memory, the server could handle at most around 15 to 20 requests before requiring our intervention. This finding gave us valuable insight for future versions of the application, since this limit will need to be addressed to provide a reliable production system.
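The team used Postman, whose test scripts are written in JavaScript. As a rough Python analogue of the same idea, the sketch below fires batches of simultaneous POST requests and grows the batch size until some of them fail. The endpoint URL, payload and content type are hypothetical, and the text does not say whether the request limit was measured with simultaneous requests, so this is only one possible setup.

```python
import concurrent.futures
import urllib.error
import urllib.request
from typing import Callable, Dict


def post_manuscript(url: str, payload: bytes, timeout: float = 60.0) -> int:
    """Send one POST request with the manuscript bytes; return the HTTP status (0 = no response)."""
    request = urllib.request.Request(
        url, data=payload, method="POST",
        headers={"Content-Type": "application/octet-stream"},  # assumed content type
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with an error status, e.g. 500 or 503
    except OSError:
        return 0  # refused connection, timeout or reset: no usable response


def stress(send: Callable[[], int], concurrent_requests: int) -> Dict[str, int]:
    """Fire a batch of simultaneous requests and count 2xx responses versus everything else."""
    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrent_requests) as pool:
        statuses = list(pool.map(lambda _: send(), range(concurrent_requests)))
    ok = sum(1 for status in statuses if 200 <= status < 300)
    return {"ok": ok, "failed": len(statuses) - ok}


def find_limit(send: Callable[[], int], max_concurrency: int = 50) -> int:
    """Grow the batch size one request at a time; return the largest size with no failures."""
    limit = 0
    for n in range(1, max_concurrency + 1):
        if stress(send, n)["failed"]:
            break
        limit = n
    return limit
```

For example, `find_limit(lambda: post_manuscript(url, payload))`, with a hypothetical `url` and manuscript `payload`, returns the largest batch size that completed without failures. Note that the ramp sends many requests in total, so it should only be pointed at a test deployment.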

Compatibility Testing

Another major testing phase, especially when developing a web-based application, is ensuring compatibility with the major web browsers and their most widely used versions. We verified compatibility with the following browsers:

| Browser | Compatible versions |
|---|---|
| Microsoft IE | > 9 |
| Microsoft Edge | > 40.15063 |
| Google Chrome | > 61.0.3163 |
| Firefox | > 52 |
| Safari | > 8 |

UI Testing on the Front-End

The main UI testing techniques used on the front-end of the application were structural testing and interaction testing [2].

Structural Testing

The goal of our structural tests was to ensure that each page presented the specified elements, such as titles, forms, input fields and image viewers. To do so, we again used Jest, this time for its snapshot testing functionality. This allowed us to reveal hidden bugs as well as spot sections of dead code.

Interaction Testing

Interaction testing of the UI elements was mainly used to ensure that redirect buttons and form submissions worked correctly. For this task we again used the Enzyme library.

User Experience Testing

All of our user experience tests were carried out with users who had no prior experience of the application, to best represent the experience of a new user using our program for the first time. The following are the usage scenarios we tested and the feedback we received:

Acceptance Testing: The users found the front-end and user interface naturally intuitive and were able to perform the tasks they set out to do without difficulty or guidance. They could achieve the same goals when using the front-end on a tablet, showcasing the responsive design of the application.

Usability Testing: When presented with a task similar to the use cases described in the requirements, the test user successfully carried out the steps and navigated to the relevant places within the app to perform the tasks asked of them [3].

Regression Testing: Even though we entirely changed the technology used for the front-end since the prototype (from Angular to React) and altered the look of the interface quite drastically from its original appearance, the structure of the application remained similar, so the user had no problems switching to the new design.

References

[1] Fischer, L.M.N., 2017. React for real: front-end code, untangled.

[2] Husmann, M. et al., 2016. UI Testing Cross-Device Applications. Proceedings of the 2016 ACM International Conference on Interactive Surfaces and Spaces, pp.179–188.

[3] Barnum, C.M.M.N., 2011. Usability testing essentials: ready, set-- test!, Amsterdam; Boston: Morgan Kaufmann Publishers.